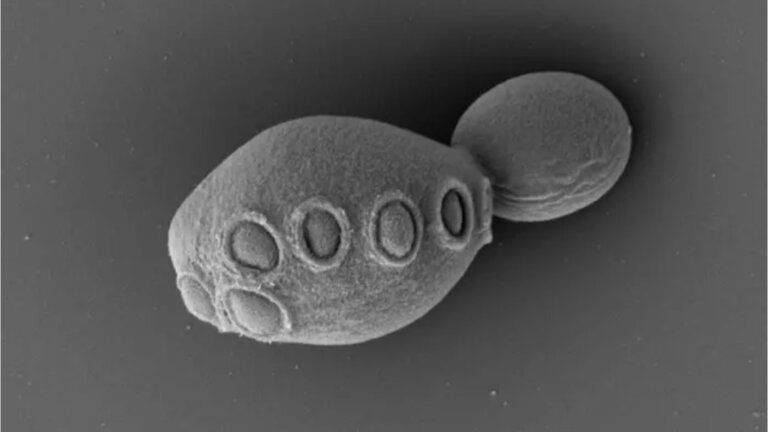

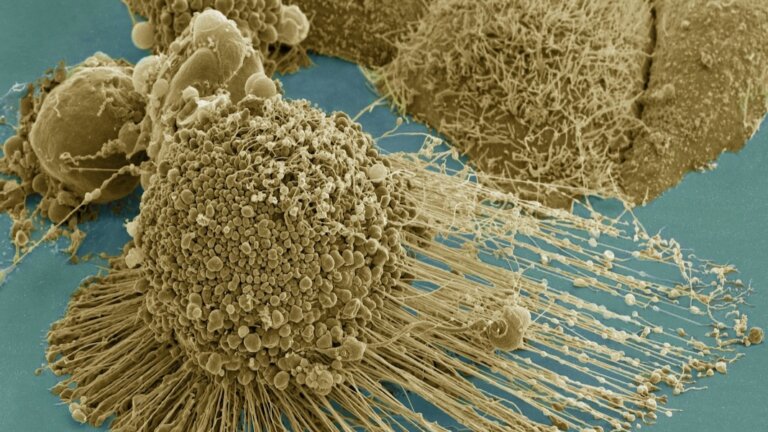

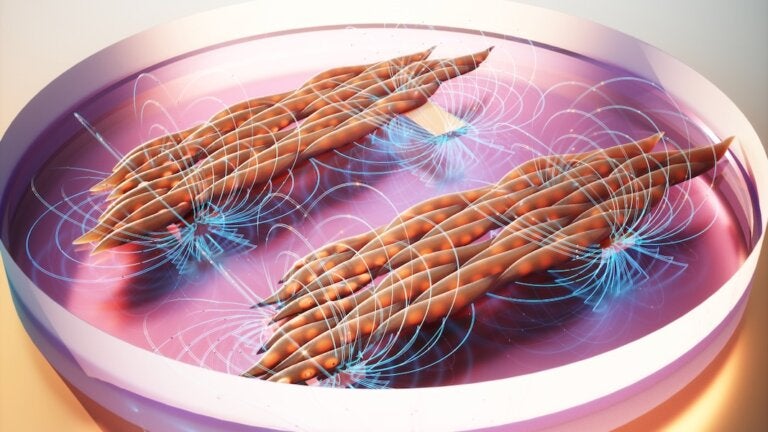

Our ability to manipulate the genes of living organisms has expanded dramatically in recent years. Now, researchers are a step closer to building genomes from scratch after unveiling a strain of yeast with more than 50 percent synthetic DNA.

Since 2006, an international consortium of researchers called the Synthetic Yeast Genome Project has been attempting to rewrite the entire genome of brewer’s yeast. The organism is an attractive target because it’s a eukaryote like us, and it’s also widely used in the biotechnology industry to produce biofuels, pharmaceuticals, and other high-value chemicals.

While researchers have previously rewritten the genomes of viruses and bacteria, yeast is more challenging because its DNA is split across 16 chromosomes. To speed up progress, the research groups involved each focused on rewriting a different chromosome, before trying to combine them.

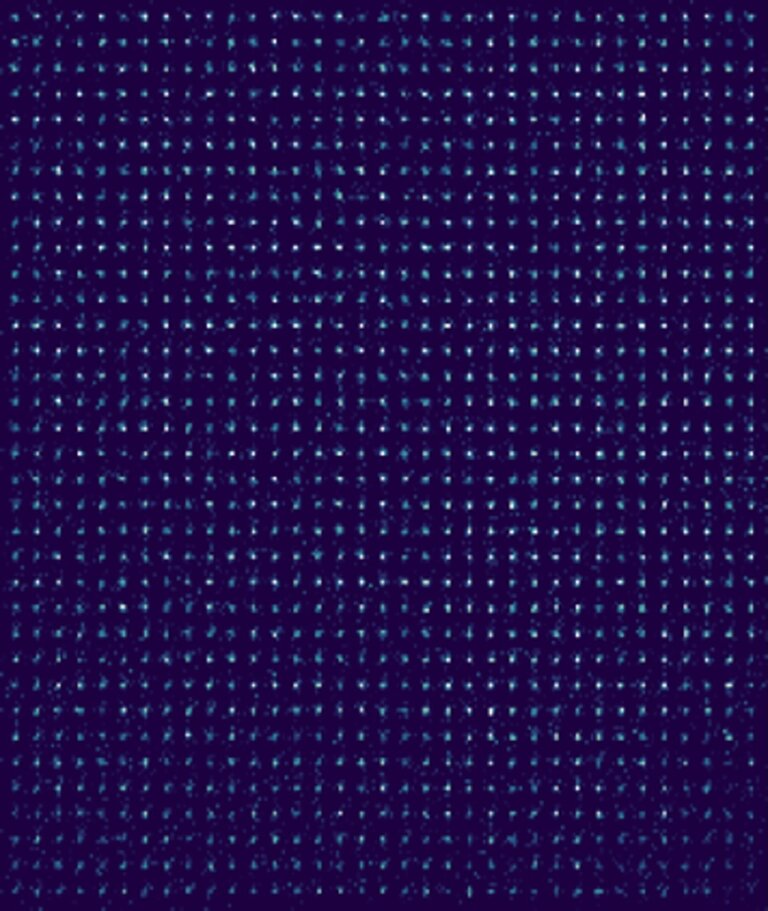

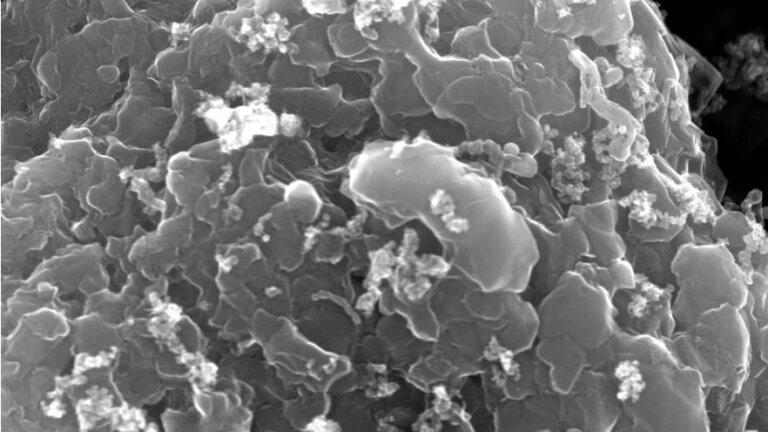

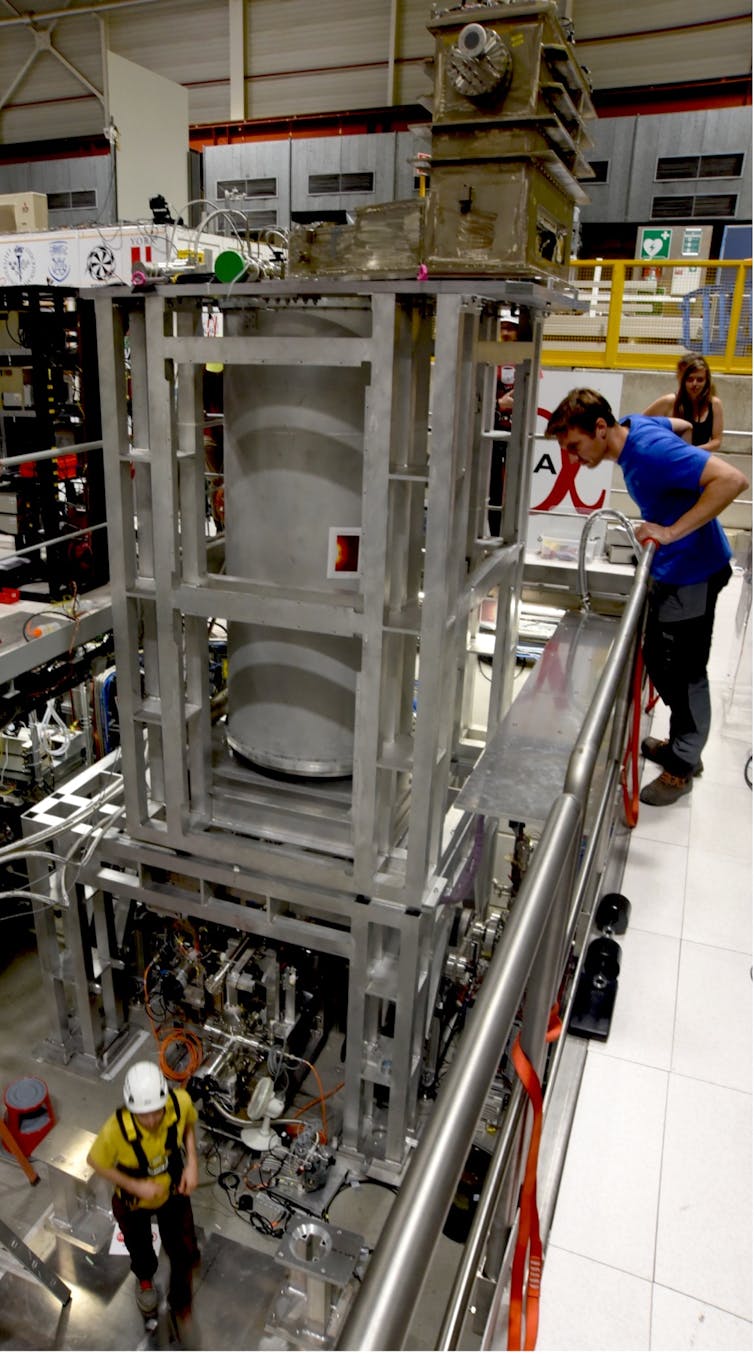

The team has now successfully synthesized new versions of all 16 chromosomes and created an entirely novel chromosome. In a series of papers in Cell and Cell Genomics, the team also reports the successful combination of seven of these synthetic chromosomes, plus a fragment of another, in a single cell. Altogether, they account for more than 50 percent of the cell’s DNA.

“Our motivation is to understand the first principles of genome fundamentals by building synthetic genomes,” co-author Patrick Yizhi Cai from the University of Manchester said in a press release. “The team has now re-written the operating system of the budding yeast, which opens up a new era of engineering biology—moving from tinkering a handful of genes to de novo design and construction of entire genomes.”

The synthetic chromosomes differ notably from those of normal yeast. The researchers removed considerable amounts of “junk DNA” that is repetitive and doesn’t code for specific proteins. In particular, they cut stretches of DNA known as transposons, which can naturally recombine in unpredictable ways, to improve the stability of the genome.

They also moved all genes coding for transfer RNA (tRNA) onto a completely new 17th chromosome. Transfer RNA molecules carry amino acids to ribosomes, the cell’s protein factories. Cai told Science that tRNA genes are “DNA damage hotspots.” The group hopes that separating them out and housing them in a so-called “tRNA neochromosome” will make it easier to keep them under control.

“The tRNA neochromosome is the world’s first completely de novo synthetic chromosome,” says Cai. “Nothing like this exists in nature.”

Another significant alteration could accelerate efforts to find useful new strains of yeast. The team incorporated a system called SCRaMbLE into the genome, making it possible to rapidly rearrange genes within chromosomes. This “inducible evolution system” allows cells to quickly cycle through potentially interesting new genomes.

“It’s kind of like shuffling a deck of cards,” co-author Jef Boeke from New York University Langone Health told New Scientist. “The scramble system is essentially evolution on hyperspeed, but we can switch it on and off.”
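For readers who think in code, the card-shuffling analogy can be sketched as a toy simulation. This is an illustration only, not the team's method: the function `scramble`, its parameters, and the gene labels are hypothetical, and the model assumes that each rearrangement is either an inversion or a deletion of a random stretch of genes. The `induced` flag stands in for the on/off switch Boeke describes.

```python
import random

def scramble(genes, events=3, induced=True, rng=None):
    """Toy model of an inducible rearrangement system (hypothetical, for illustration).

    genes   -- ordered list of gene labels on one chromosome
    events  -- maximum number of rearrangement events to apply
    induced -- if False, the system is "switched off" and nothing changes
    rng     -- optional random.Random instance for reproducibility
    """
    genes = list(genes)          # work on a copy, leave the input untouched
    if not induced:
        return genes
    rng = rng or random.Random()
    for _ in range(events):
        if len(genes) < 2:
            break                # nothing left to rearrange
        # Pick two cut points along the chromosome (assumed to be random here).
        i, j = sorted(rng.sample(range(len(genes) + 1), 2))
        if rng.random() < 0.5:
            genes[i:j] = genes[i:j][::-1]   # inversion: flip the segment
        else:
            del genes[i:j]                  # deletion: drop the segment
    return genes

if __name__ == "__main__":
    chromosome = ["geneA", "geneB", "geneC", "geneD", "geneE", "geneF"]
    # Each run yields a different "hand" from the same starting deck.
    for trial in range(3):
        print(scramble(chromosome, events=2))
```

Running the script a few times gives a different gene order and content each time, which is the sense in which the system lets cells cycle through many candidate genomes. A real cell would only survive if the rearranged genome still worked, a selection step this sketch leaves out.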

To get several of the modified chromosomes into the same yeast cell, Boeke’s team ran a lengthy cross-breeding program, mating cells with different combinations of synthetic chromosomes. At each step there was an extensive “debugging” process, as the synthetic chromosomes interacted in unpredictable ways.

Using this approach, the team incorporated six full chromosomes and part of another one into a cell that survived and grew. They then developed a method called chromosome substitution to transfer the largest yeast chromosome from a donor cell, bumping the total to seven and a half and increasing the total amount of synthetic DNA to over 50 percent.

Getting all 17 synthetic chromosomes into a single cell will require considerable extra work, but crossing the halfway point is a significant achievement. And if the team can create yeast with a fully synthetic genome, it will mark a step change in our ability to manipulate the code of life.

“I like to call this the end of the beginning, not the beginning of the end, because that’s when we’re really going to be able to start shuffling that deck and producing yeast that can do things that we’ve never seen before,” Boeke says in the press release.

Image Credit: Scanning electron micrograph of partially synthetic yeast / Cell/Zhao et al.